Concrete measures

- Establishment of the competence cluster Metrology for Artificial Intelligence in Medicine (M4AIM)

- Basic research on trust in AI in medicine

- Research into methodologies and tools for the evaluation of test and training data

- Investigation of AI applications in medicine

Contributions

- Appropriate metrics for assessing AI performance, which include, in particular, robustness, explainability, and predictive reliability

- Reference datasets for assessing the quality of AI

- “Good practice” examples for the assessment of large datasets in terms of uncertainty, representativeness, and comparability

- Further developed measurement methods and measurement data evaluation through the use of AI

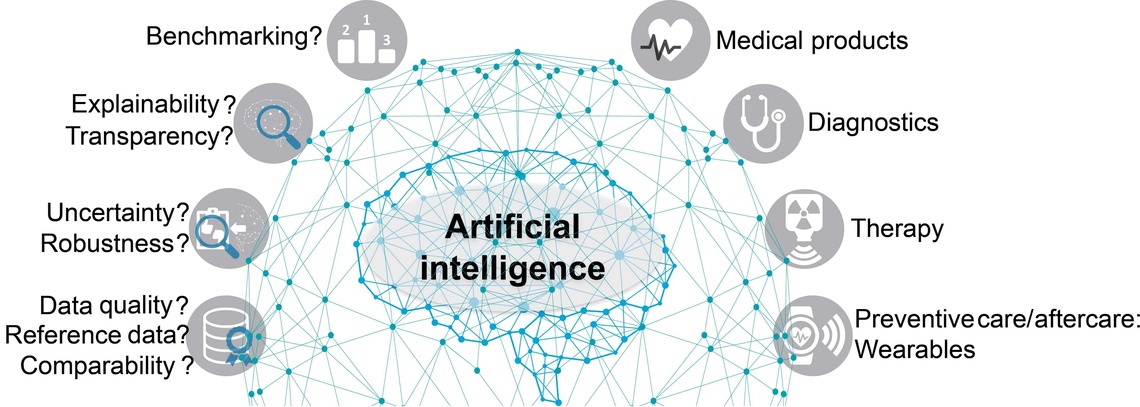

For AI systems to be considered trustworthy, they must meet several quality criteria.

Their behavior must be explainable, so that it can be verified that their predictions rest on the information in the data that is relevant to the application.

In medical technology, the robustness and generalisability of AI methods play a major role, that is, how a method behaves when input data deviate from the data used to train it. This matters most when certain features are not reflected in the training data at all.
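One simple way to make robustness measurable is to compare a model's accuracy on the original inputs with its accuracy on deliberately shifted inputs. The following is a minimal sketch, not a metric defined by M4AIM: the names `accuracy`, `robustness_gap`, and `threshold_model`, as well as the toy data and the shift of 2.0, are illustrative assumptions.

```python
def accuracy(model, inputs, labels):
    """Fraction of inputs for which the model returns the reference label."""
    if not labels:
        raise ValueError("at least one labelled input is required")
    correct = sum(model(x) == y for x, y in zip(inputs, labels))
    return correct / len(labels)


def robustness_gap(model, inputs, labels, perturb):
    """Accuracy lost when every input is passed through `perturb` first."""
    shifted = [perturb(x) for x in inputs]
    return accuracy(model, inputs, labels) - accuracy(model, shifted, labels)


# Hypothetical toy classifier and data, for illustration only.
def threshold_model(x):
    return int(x >= 5.0)


inputs = [1.0, 4.0, 6.0, 9.0]
labels = [0, 0, 1, 1]

# Simulate a systematic offset, e.g. a differently calibrated sensor.
gap = robustness_gap(threshold_model, inputs, labels, lambda x: x + 2.0)
```

A gap near zero suggests the model tolerates this particular shift; it says nothing about other kinds of deviation, which would each need their own perturbation.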

Along with the AI’s predictions, an estimate of their uncertainty must also be available. Inherent limitations of the AI system, the quality of the data, and application contexts that deviate from training and test conditions must all be taken into account.

The pilot project will develop appropriate metrics to assess AI performance, taking into account robustness, explainability, and predictive reliability.

Importantly, like predictive quality itself, approaches to estimating uncertainty or providing explanations must be rigorously validated against benchmarks. Within the framework of M4AIM, corresponding benchmarks and metrics for the evaluation of “explainable AI” are currently being developed.
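As an illustration of what validating an uncertainty estimate against a benchmark can mean, the sketch below checks how often reference values fall inside the prediction intervals a model reports. It assumes Gaussian prediction intervals and uses made-up numbers; the function name `empirical_coverage` is hypothetical and this is not the benchmark under development in M4AIM.

```python
from statistics import NormalDist


def empirical_coverage(y_true, y_pred, y_std, level=0.95):
    """Fraction of reference values lying inside the stated prediction interval."""
    if not y_true:
        raise ValueError("at least one reference value is required")
    # Two-sided coverage factor for a Gaussian interval (about 1.96 for 95 %).
    k = NormalDist().inv_cdf(0.5 + level / 2.0)
    hits = sum(abs(t - p) <= k * s for t, p, s in zip(y_true, y_pred, y_std))
    return hits / len(y_true)


# Hypothetical benchmark values, for illustration only.
y_true = [1.0, 2.0, 3.0, 4.0]   # reference values
y_pred = [1.1, 1.9, 3.5, 4.0]   # model predictions
y_std = [0.1, 0.1, 0.1, 0.1]    # standard uncertainties reported by the model

coverage = empirical_coverage(y_true, y_pred, y_std)
```

If the observed coverage is well below the nominal level, the reported uncertainties are too optimistic; if it is well above, they are too conservative. A benchmark with so few points is of course only a demonstration.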

AI methods learn to interpret unseen input data from reference datasets. The quality of an AI method therefore depends largely on the quality of its training data. Ensuring that quality requires careful selection and assessment of the data and its enrichment with semantic information and other metadata. The training data must also be mutually comparable and representative of the targeted use cases.
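Representativeness can be checked, for one feature at a time, by comparing its distribution in the training data with its distribution in the targeted population. The sketch below computes the two-sample Kolmogorov-Smirnov statistic for this purpose. The choice of this statistic, the function name `ks_statistic`, and the age values are assumptions made for illustration, not a method prescribed by the source.

```python
from bisect import bisect_right


def ks_statistic(sample_a, sample_b):
    """Largest gap between the empirical CDFs of two samples.

    Returns 0.0 for identical distributions and 1.0 for fully separated ones.
    """
    a, b = sorted(sample_a), sorted(sample_b)
    if not a or not b:
        raise ValueError("both samples must be non-empty")
    # Both CDFs are step functions, so the gap can only peak at an observed value.
    return max(
        abs(bisect_right(a, x) / len(a) - bisect_right(b, x) / len(b))
        for x in a + b
    )


# Hypothetical patient ages, for illustration only.
training_ages = [30, 35, 40, 45]
target_ages = [60, 65, 70, 75]

shift = ks_statistic(training_ages, target_ages)
```

A value close to 1, as in this contrived example, indicates that the training data barely overlap with the intended use case for that feature. The statistic looks at one feature in isolation and does not capture joint effects across features.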

Another aspect is data protection. Clinical routine data in particular cannot readily be made accessible to the professional public. One subject of active research is therefore the generation of synthetic reference data using numerical simulation as well as generative modeling with machine learning methods. Within the framework of M4AIM, this approach is currently being tested on data from intensive care units.

Dedicated test datasets are essential to validate and test AI applications. They therefore play a prominent role in the conformity assessment of AI applications.

This results in complex challenges for the QI:

- Development and validation of methodologies and tools for the assessment of test and training data

- Recommendations for annotation rules and metadata (units, uncertainties, measurement methods) in selected application areas

- Norms and standards for quantifiable and testable criteria for data quality

- Qualification of testing bodies to cover testing needs (“digitisation of people”)
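To make the metadata recommendation above concrete (units, uncertainties, measurement methods), the following sketch shows one way an annotated data point could be represented so that missing or implausible metadata is rejected at creation time. The class `AnnotatedMeasurement`, its field names, and the example values are hypothetical and do not reflect an existing standard or an M4AIM specification.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AnnotatedMeasurement:
    """A single value together with the metadata needed to interpret it."""

    quantity: str                # what was measured, e.g. "heart rate"
    value: float
    unit: str                    # unit of `value` and of the uncertainty
    standard_uncertainty: float  # expressed in the same unit as `value`
    method: str                  # how the value was obtained

    def __post_init__(self):
        # Reject records whose metadata is missing or implausible.
        if not self.unit:
            raise ValueError("unit must not be empty")
        if not self.method:
            raise ValueError("measurement method must not be empty")
        if self.standard_uncertainty < 0:
            raise ValueError("standard uncertainty must be non-negative")


# Hypothetical record, for illustration only.
record = AnnotatedMeasurement(
    quantity="heart rate",
    value=72.0,
    unit="1/min",
    standard_uncertainty=1.5,
    method="ECG",
)
```

Enforcing such checks when data are recorded, rather than when they are later used for training, keeps incomplete annotations from entering a reference dataset unnoticed.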